Let’s go

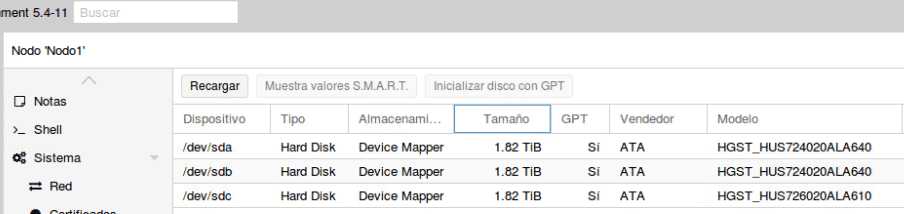

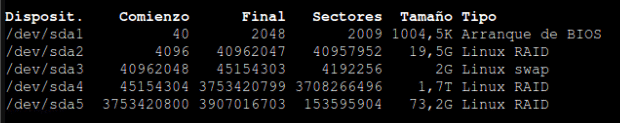

We like challenges. As is well known, Proxmox does not recommend software RAID for its platform because the performance is poor. That is true, but only half true, as we found after extensive testing on a 3-node Proxmox platform with GlusterFS: each node has three local 2 TB 7,200 rpm disks, in RAID 5 for the Gluster brick partition and in RAID 1 for the Proxmox system partition.

As you can see, the disks are 7,200 rpm, and since the data is also replicated across 3 servers over a 1 Gb link, good performance is hard to achieve. That is true, but only if we follow the recommendations found on the Internet, which are logical. In fact, that is where we started: reading recommendations and testing. Once we had exhausted them all, with the VMs stuck at a truly horrible write speed of 10 MB/s no matter what we did, we decided to analyze everything from scratch and configure it according to our own criteria and calculations.
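Both limits can be put into numbers. This is a rough sketch that relies on our own assumptions. Every disk gives up the same amount of space to the system partition (the `sys_gb` parameter). The Gluster volume is replica 3 with one brick per node. Replication happens on the client side, so the node running a VM writes one copy to its local brick and sends the other two over its single 1 Gb link. The function names are hypothetical.

```shell
#!/bin/sh
# Back-of-the-envelope figures for the 3-node layout described above.
# Assumptions (ours, not measured): equal disks, the same system partition
# size taken from every disk, replica 3 with one brick per node.

# Usable RAID 5 size in GB over N equal partitions: (N - 1) * partition size.
raid5_usable_gb() {
  disks=$1 disk_gb=$2 sys_gb=$3
  echo $(( (disks - 1) * (disk_gb - sys_gb) ))
}

# Theoretical write ceiling in MB/s when the writing node hosts one replica:
# the remaining (replicas - 1) copies share a single link of link_mbit Mbit/s.
replica_write_ceiling_mbs() {
  link_mbit=$1 replicas=$2
  echo $(( link_mbit / 8 / (replicas - 1) ))
}
```

`raid5_usable_gb 3 2000 0` prints 4000. Each brick therefore holds about 4 TB, and because replica 3 keeps a full copy on every node, the volume also offers about 4 TB out of 18 TB raw (before subtracting the system partition). `replica_write_ceiling_mbs 1000 3` prints 62, a theoretical ceiling of about 62 MB/s before protocol overhead. The 10 MB/s mentioned above was therefore far below what the network alone allows.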

We are not going to explain how to install Proxmox with software RAID, since there are many good guides on the Internet, nor how to install Gluster. We only cover the parameters we changed. Even so, if you would like a guide, contact us and we will be happy to make time to write one.

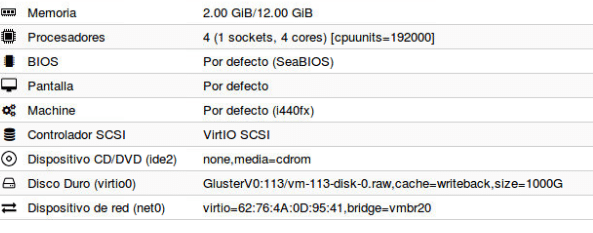

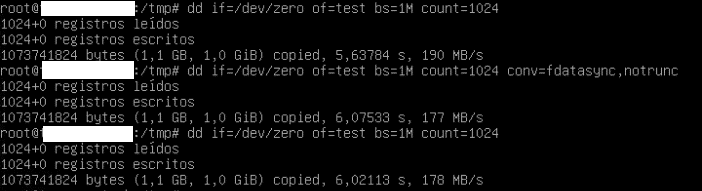

First of all, based on theory, we initially chose raw as the disk format for the VMs because it is faster: it has no associated metadata, although you lose the ability to take snapshots. The choice also depends on the purpose; for example, you can use qcow2 for the system disk and raw for the data disk. In our case we were after performance, and raw is "theoretically" faster. Once the VMs were created in raw format on the VirtIO Block bus (the one that gave us the best performance), we began testing with and without write back cache, with the results shown below.

Not bad for many VMs sharing a datastore on 7,200 rpm disks.

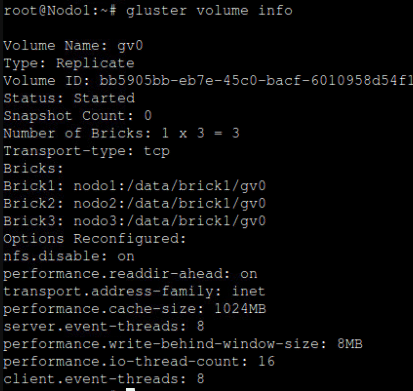
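The write back on/off comparison can also be driven from a node's shell. The sketch below makes some assumptions: VM ID 100, a Proxmox storage called `gluster`, and the raw disk on slot virtio0 are all hypothetical. It re-declares the drive with the desired cache mode and restarts the VM so that the new drive options are picked up. By default (`DRY_RUN=1`) it only prints the commands.

```shell
#!/bin/sh
# Sketch: change the cache mode of a VM's VirtIO Block disk between test runs.
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 runs them on a node.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi; }

# $1 = VM ID, $2 = volume ID from the VM config, $3 = none | writeback
set_virtio_cache() {
  vmid=$1 volume=$2 mode=$3
  # Re-declaring the drive with the same volume only changes its options.
  run qm set "$vmid" --virtio0 "${volume},cache=${mode}"
  # Restart so the VM picks up the new drive options.
  run qm shutdown "$vmid"
  run qm start "$vmid"
}
```

For example, run `set_virtio_cache 100 gluster:100/vm-100-disk-0.raw writeback`, then repeat with `none` for the comparison run.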

To get these values, which were the best we achieved after many tests, we configured the following parameters in Gluster.

`gluster volume get <VOLNAME> all` lists every option with its current value, defaults included. We are more interested in `gluster volume info`, which lists only the options that have been changed from their defaults, under the Options Reconfigured heading. We are not going to explain them one by one, because there is already plenty of information on the Internet and the names give a fair idea of their purpose.
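To see only the changed options, you can cut the Options Reconfigured section out of the `gluster volume info` output with awk. This is a small sketch, and the function name is our own:

```shell
#!/bin/sh
# Print only the option lines under "Options Reconfigured:" from the output of
# `gluster volume info` read on stdin. A blank line or the next "Volume Name:"
# ends the section, so output for several volumes is handled too.
reconfigured_options() {
  awk '
    /^Options Reconfigured:/                 { in_opts = 1; next }
    /^[[:space:]]*$/ || /^Volume Name:/      { in_opts = 0 }
    in_opts                                  { print }
  '
}
```

Usage: `gluster volume info gv0 | reconfigured_options`.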

To configure these parameters, use:

`gluster volume set <VOLNAME> <OPT-NAME> <OPT-VALUE>`

Example: `gluster volume set gv0 performance.cache-size 1024MB`

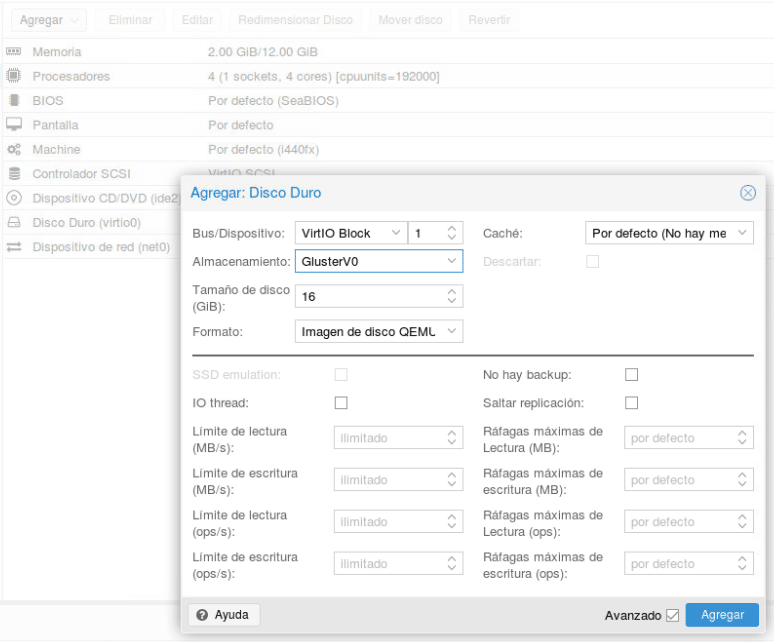
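To apply several options in one go and read each one back, a loop over `gluster volume set` and `gluster volume get` is enough. This is a minimal sketch that assumes a volume named gv0, as in the example. `performance.cache-size 1024MB` is the example above. The other two entries are real Gluster options with hypothetical values, not the settings in our screenshot. By default (`DRY_RUN=1`) the sketch only prints the commands.

```shell
#!/bin/sh
# Sketch: apply a list of Gluster volume options, reading each one back.
# DRY_RUN=1 (default) only prints the commands. Commands get /dev/null as
# stdin so they cannot consume the option list fed to the loop.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@" </dev/null; fi; }

apply_options() {
  vol=$1
  # name/value pairs; only cache-size comes from the article, the other two
  # values are hypothetical examples.
  while read -r name value; do
    [ -n "$name" ] || continue
    run gluster volume set "$vol" "$name" "$value"
    run gluster volume get "$vol" "$name"
  done <<'EOF'
performance.cache-size 1024MB
performance.write-behind-window-size 4MB
performance.io-thread-count 32
EOF
}
```

Usage: `apply_options gv0`, or `DRY_RUN=0 apply_options gv0` on a Gluster node to actually apply the options.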

Even so, we were not convinced by the performance. It was not bad at all, but we had the feeling that the cache was not being used properly: it was being used, but not to the full size we had set. After many tests changing drivers, parameters and so on without getting much more, we decided to try qcow2 by converting that VM's disk to qcow2 (make a backup first).

We shut down the VM and added a second, small qcow2 disk, small so that creating it is quick. Once we convert the data onto it and reattach it, it will take on the size of our existing disk.

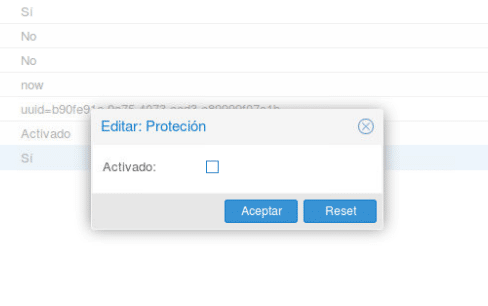

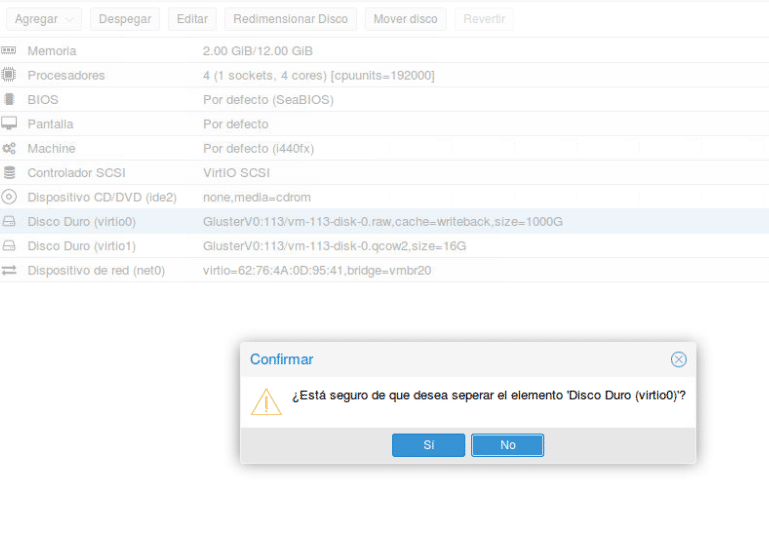
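You can run the same two steps from a node's shell. This sketch assumes VM ID 100, a GlusterFS storage named `gluster`, and the raw disk on virtio0, all of which are hypothetical. `<storage>:1` is Proxmox's syntax for allocating a new 1 GiB volume, and `format=qcow2` selects the file format. By default (`DRY_RUN=1`) it only prints the commands.

```shell
#!/bin/sh
# Sketch: shut the VM down cleanly and add a small qcow2 placeholder disk.
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 runs them on a node.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi; }

# $1 = VM ID, $2 = ID of the GlusterFS storage in Proxmox.
add_qcow2_placeholder() {
  vmid=$1 storage=$2
  run qm shutdown "$vmid"
  # "<storage>:1" allocates a new 1 GiB volume; virtio1 assumes the raw disk
  # already occupies virtio0.
  run qm set "$vmid" --virtio1 "${storage}:1,format=qcow2"
}
```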

We disable the VM's protection under Options.

We detach both disks.

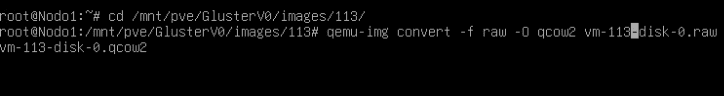
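From the CLI, protection is controlled by the VM's `protection` option. `qm disk unlink` without `--force` removes drives from the configuration but keeps the volumes as unused entries (on older releases the command is `qm unlink`). This sketch assumes the raw disk is virtio0 and the placeholder is virtio1, as in the previous step.

```shell
#!/bin/sh
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 runs them on a node.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi; }

# $1 = VM ID. Assumes the raw disk is virtio0 and the placeholder virtio1.
detach_disks() {
  vmid=$1
  # Protection blocks disk removal, so turn it off first.
  run qm set "$vmid" --protection 0
  # Without --force, unlink only drops the drives from the VM config and keeps
  # the volumes as unused[n] entries; no data is deleted.
  run qm disk unlink "$vmid" --idlist virtio0,virtio1
}
```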

We open a console on one of the nodes, go to the VM's directory inside the Gluster brick, and convert the disk with `qemu-img convert -f raw -O qcow2 disco.raw disco.qcow2`.

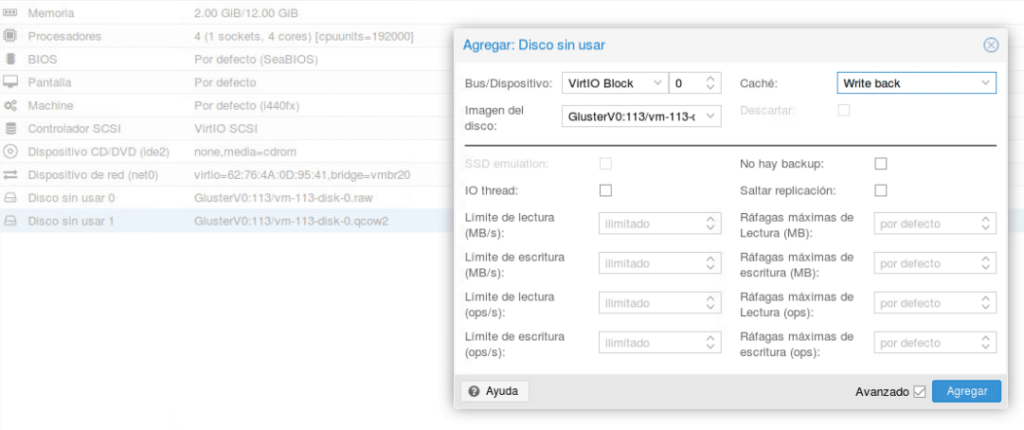
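Here is a sketch of the conversion, with two assumptions of ours. First, it works through the FUSE mount that Proxmox creates under /mnt/pve/<storage> rather than inside the brick. Gluster's documentation advises against writing to bricks directly, since such writes bypass replication. Second, it writes the output over the small placeholder file, which is our reading of the earlier note that the reattached disk takes on the original size. The file names are hypothetical. `-p` prints progress, and `qemu-img check` and `qemu-img info` verify the result.

```shell
#!/bin/sh
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 runs them on a node.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi; }

# $1 = storage ID, $2 = VM ID, $3 = raw source file, $4 = qcow2 target file.
# Paths go through the FUSE mount /mnt/pve/<storage>, not the brick itself.
convert_to_qcow2() {
  dir="/mnt/pve/$1/images/$2"
  src="$dir/$3" dst="$dir/$4"
  # -p shows progress; -f and -O state the input and output formats.
  run qemu-img convert -p -f raw -O qcow2 "$src" "$dst"
  # Verify the qcow2 metadata, then show format and virtual size.
  run qemu-img check "$dst"
  run qemu-img info "$dst"
}
```

Usage: `convert_to_qcow2 gluster 100 vm-100-disk-0.raw vm-100-disk-1.qcow2`.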

Depending on the amount of data on the disk, this takes more or less time. Once it finishes, we reattach the disk, which appears as unused in the Hardware tab, by editing it and enabling write back as shown in the image.

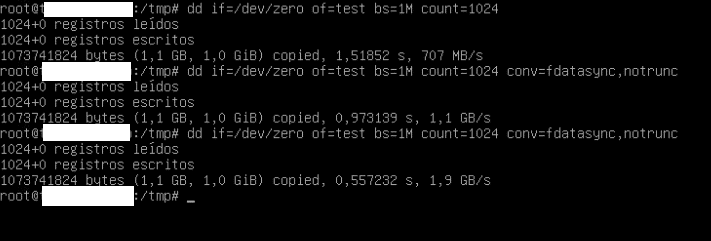
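The CLI equivalent is shown below as a sketch. It assumes the converted file is the volume `gluster:100/vm-100-disk-1.qcow2`, which is a hypothetical name. The sketch attaches that volume on virtio0 with `cache=writeback`. It also sets the boot order explicitly, in case detaching the original disk removed it from the boot order.

```shell
#!/bin/sh
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 runs them on a node.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi; }

# $1 = VM ID, $2 = volume ID of the converted qcow2 file (listed as unused).
attach_qcow2() {
  vmid=$1 volume=$2
  run qm set "$vmid" --virtio0 "${volume},cache=writeback"
  # Boot from the re-attached disk explicitly.
  run qm set "$vmid" --boot order=virtio0
}
```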

Once this is done, we start the VM and run the same tests.

As you can see, the VM is now making good use of the cache, and performance has increased dramatically. Once you have verified that the VM works perfectly, you can delete the raw disk, which appears as unused in the Hardware tab, and re-enable protection.
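The cleanup can also be done from the CLI. In this sketch, keep in mind that unlinking an unused[n] entry deletes the volume physically. Run it only after the qcow2 disk has been verified, and pass the key that actually holds the old raw file (`unused0` in the usage example is hypothetical). Protection is re-enabled last, since it blocks disk removal.

```shell
#!/bin/sh
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 runs them on a node.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then printf '+ %s\n' "$*"; else "$@"; fi; }

# $1 = VM ID, $2 = unused[n] key that holds the old raw volume.
cleanup_raw() {
  vmid=$1 key=$2
  # Unlinking an unused[n] entry physically deletes the volume.
  run qm disk unlink "$vmid" --idlist "$key"
  # Re-enable protection last, since it blocks disk removal.
  run qm set "$vmid" --protection 1
}
```

Usage: `cleanup_raw 100 unused0`.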

This change improved not only the VM's performance but also the hypervisor's. With raw disks, the Proxmox IO delay graph could spike to 50%, whereas now it stays at values that are good for our scenario, never exceeding 6%.
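To follow a comparable metric from a shell, here is a sketch that samples /proc/stat twice and reports the share of CPU time spent in iowait over the interval. We assume that the IO delay graph corresponds to this iowait figure; this is an assumption, not something taken from Proxmox's code. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: percentage of CPU time spent in iowait over an interval (seconds),
# computed from two samples of the aggregate "cpu" line in /proc/stat.
iowait_pct() {
  interval=${1:-5}
  before=$(head -n 1 /proc/stat)
  sleep "$interval"
  after=$(head -n 1 /proc/stat)
  printf '%s\n%s\n' "$before" "$after" | awk '
    {
      total = 0
      for (i = 2; i <= 9; i++) total += $i   # user..steal; guest is already in user
      io[NR] = $6                             # iowait
      tot[NR] = total
    }
    END {
      dio = io[2] - io[1]
      if (dio < 0) dio = 0                    # iowait can occasionally go backwards
      dt = tot[2] - tot[1]
      printf "%.1f\n", (dt > 0 ? 100 * dio / dt : 0)
    }'
}
```

Usage: `iowait_pct 5` prints a single percentage such as `3.2`.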

Therefore, in a setup like ours, with Proxmox, GlusterFS and software RAID, raw is far from the best choice: it is a bottleneck.

And with 3 nodes and no external storage array, we have achieved a platform with decent HA.


Thank you for reading our posts.

FAQs

Proxmox supports both, but performance depends on the processor model and generation. AMD EPYC and Ryzen CPUs offer better per-core performance than some older Xeons. It is also important to enable virtualization in the BIOS (VT-x on Intel, AMD-V on AMD) for best performance.
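You can check the virtualization point quickly on a Linux host with this sketch. /dev/kvm only appears once the KVM module has loaded, which requires VT-x or AMD-V to be enabled in the firmware. The vmx/svm count shows how many logical CPUs advertise the extension. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: report CPU virtualization flags and whether /dev/kvm is present.
kvm_status() {
  # One "flags" line per logical CPU; count those listing vmx (Intel) or svm (AMD).
  flags=$(grep -Ec '(^|[[:space:]])(vmx|svm)([[:space:]]|$)' /proc/cpuinfo)
  if [ -e /dev/kvm ]; then kvm=yes; else kvm=no; fi
  echo "cpu_threads_with_vmx_or_svm=$flags kvm_device=$kvm"
}
```

On a correctly configured node, `kvm_status` should report a non-zero count and `kvm_device=yes`.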

It is recommended to use SSD or NVMe disks instead of traditional HDDs, take advantage of RAM cache if using ZFS, and choose the appropriate format for virtual disks (e.g. qcow2 for snapshots or raw for better performance). Speed can also be improved by distributing the load across different disks with RAID or Ceph.

Proxmox can run with 2 GB of RAM, but little else will. For efficient virtualization, at least 16 GB is recommended, especially when using ZFS or running multiple VMs. With Ceph, memory requirements have to be calculated from your available hardware and the number of OSDs.

If high availability and scalability are desired, a multi-node cluster (minimum 3) is the best option, as it allows VM migration without interruption and load distribution. However, for small or low-budget installations, a single well-configured node may be sufficient.